
Keywords: Web security, JavaScript, same origin policy, cross-site scripting, cross-site request forgery, cross-domain information flow

Current web pages are more than collections of static information: they are a synthesis of code and data often provided by multiple sources that are assembled and run in the browser. Users generally trust the web sites they visit; however, external content may be untrusted, untrustworthy, or even malicious. Such malicious inclusions can initiate drive-by downloads [23], misuse a user's credentials [13], or even initiate distributed denial-of-service attacks [20].

One common thread in these scenarios is that the browser must communicate with web servers that normally wouldn't be contacted. Those servers may be controlled by an attacker, may be victims, or may be unwitting participants; whatever the case, information should not be flowing between the user's web browser and these sites.

In this paper, we propose a policy for constraining communications and inclusions in web pages. This policy, which we call Same Origin Mutual Approval (SOMA), requires the browser to check that both the owner of the page and the third party content provider approve of the inclusion before any communication is allowed (including adding anything to a page). This ``tightening'' of the same origin policy prevents attackers from loading malicious content from arbitrary web sites and restricts their ability to communicate sensitive information. While attacks such as cross-site scripting are still possible, with SOMA they must be mounted from domains trusted by the originating domain. Because attackers have much less control over this small subset than they do over other arbitrary hosts on the Internet, SOMA can prevent the exploitation of a wide range of vulnerabilities in web applications.

In addition to being effective, SOMA is also a practical proposal. To participate in SOMA, browsers have to make minimal code changes and web sites must create small, simple policy files. Sites and browsers participating in SOMA can see benefits immediately, while non-participating sites and browsers continue to function as normal. These characteristics facilitate incremental deployment, something that is essential for any change to Internet infrastructure.

We have implemented SOMA as an extension for Mozilla Firefox 2, one that can be run in any regular installation of the Firefox browser. In testing with this browser and simulated SOMA policy files for over 500 main pages on different sites, we have found no compatibility issues with current web sites. The policy files for these sites have been, with only a few exceptions, extremely easy to create and cause no compatibility issues. Simulated attacks are also appropriately blocked. To retrieve policy files, SOMA requires an extra web request per new domain visited. As we explain in Section 5, such overhead is minimal in practice. For these reasons, we argue that SOMA is a practical, easy to adopt, and effective proposal for improving the security of the modern web.

The remainder of this paper proceeds as follows. Section 2 gives background on current web security rules and attacks on modern web pages. Section 3 details the proposed Same Origin Mutual Approval design, which we then evaluate in Section 4. Our prototype and the results of testing in the browser are described in Section 5. We discuss some alternative JavaScript security proposals and other related work in Section 6. Section 7 concludes.

Web browsers are programs that regularly engage in extensive cross-domain communication. In the course of a user viewing a web page, they will retrieve images from one server, advertisements from another, and post a user's responses to a third. In this way the browser serves as a dynamic, cross-domain communications nexus. While such promiscuity may be permissible when combining static data, to maintain security, restrictions must be placed upon executable content.

JavaScript has two main security features that limit the potential damage of malicious scripts: the sandbox and the same origin policy. The sandbox prevents JavaScript code from affecting the underlying system (assuming there are no bugs in the implementation) or other web browser instances (including other tabs). Each page is contained within its own sandbox instance. The same origin policy [28] helps to define what can be manipulated within this sandbox and how sandboxed code can communicate with the outside world. The same origin policy is designed to prevent documents or scripts loaded from one ``origin'' from getting, or setting properties of, content loaded from a different origin (with a special case involving subdomains). The origin is defined as the protocol, port, and host from which the content originated. While scripts from different origins are not allowed to access each other's source, the functions in one script can be called from another script in the same page even if the two scripts are from different domains. JavaScript code has different access restrictions depending on the type of content being loaded. For example, it can fetch (make a request for) HTML, but it can only read and modify the information it gets as a result if the HTML falls within the same origin. These restrictions are summarized in Table 1.
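As a rough illustration of the origin definition above, the following Python sketch compares two URLs by their (protocol, host, port) triple. The function names and the default-port table are assumptions made for this example, and the subdomain special case mentioned above is deliberately ignored.

```python
from urllib.parse import urlsplit

# Default ports assumed for this sketch; only HTTP and HTTPS are covered.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url):
    """Return the (protocol, host, port) triple that identifies a URL's origin."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    # Fall back to the protocol's default port when none is given explicitly.
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(scheme)
    return (scheme, parts.hostname, port)


def same_origin(url_a, url_b):
    """True when both URLs share protocol, host, and port."""
    return origin(url_a) == origin(url_b)
```

Under this sketch, `http://example.com/a` and `http://example.com:80/b` share an origin, while a change of protocol, host, or port yields a different one.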

Any script included onto a page inherits the origin of that page. This means that if a page from http://example.com includes a script from advertiser.com, this script is considered to have the origin http://example.com. This allows the script to modify the web page from example.com. It is important to note that many scripts, including scripts dealing with embedding advertisements, require this ability. The script cannot subsequently read or manipulate content originating from advertiser.com directly; it can only read and manipulate content from example.com, or content which has inherited that origin.

While the sandbox and same origin policy protect the host and prevent many types of network communication, opportunities for recursive script inclusion, unrestricted outbound communication, cross-site request forgery, and cross-site scripting allow considerable scope for security vulnerabilities. We explore each of these issues below.

A page creator could choose to include content only from sources they deem trustworthy, but this does not mean that all content included will be directly from those sources. Any script loaded from a ``trustworthy'' domain can subsequently load content from any domain. Unfortunately, trust is not transitive, even if JavaScript treats it that way. Besides the risk of an intentionally malicious script loading additional, dangerous code, there is also the accidental risk of a ``trustworthy'' domain inadvertently loading malicious content. Even well-known, legitimate advertising services have been tricked into distributing malicious code [29,25].

While the same origin policy restricts how content from another domain can be used, it does not stop any content from other domains from being requested and loaded into the origin site. These requests for content can be abused to send information out to any arbitrary domain.

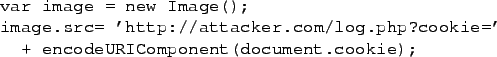

One common attack involves cookie-stealing. A script reads cookie information from the user's browser and uses it as part of the URL of a request. This request could be for something innocuous, such as an extra image, as shown in Figure 1.

Such cookie information could then be used by the remote server to gain access to the user's session, or to get other information about the user. Any information that can be read from the document could be sent out in a similar manner, including credit card information, personal emails, or username and password pairs. Even if a user does not hit ``submit'' on a form, any information they enter can be read by JavaScript and potentially retransmitted.

While no precise definition of cross-site scripting seems to be universally accepted, the core concept behind cross-site scripting (XSS) is that of a security exploit in which an attacker inserts code onto a page returned by an unsuspecting web server [1,2]. This code may be stored or reflected, it may contain JavaScript or just HTML, and it may use third party sites as sources or rely only upon the resources of the targeted server. With such ambiguity, it is possible to have a cross-site scripting attack which neither uses scripting nor is cross-site. Typically, however, XSS attacks involve JavaScript code engaging in cross-domain communication with malicious web servers.

Code injection for cross-site scripting usually occurs because user-inputted data is not sufficiently sanitized before being stored and/or displayed to other users. Although the existence of such vulnerabilities is not a flaw in the same origin policy, per se, the same origin policy does allow the injected code access to content of the originating site. Specifically, it can then steal information associated with that domain or perform actions on behalf of the user.

Some existing proposals to address cross-site scripting and other JavaScript security issues are described in greater detail in Section 6. Here we note that no current proposal targets the cross-domain communication involved in most JavaScript exploits.

The Same Origin Mutual Approval (SOMA) policy aims to tighten the same origin policy so that it can better handle exploits as discussed in Section 2, including cross-site scripting and cross-site request forgery. SOMA requires that both the origin web site and the site providing included content approve of the request before the browser allows any external content to be fetched for a page. Adding these extra checks gives site operators much more control over what gets included into or from their sites. These changes are shown in Table 2. While the differences (relative to Table 1) are all in the Fetch column, a ``fetch'' can also be used to leak (send out) information such as cookies, as discussed earlier.


A key idea behind SOMA is that security policy should be decided by site operators, who have a vested interest in doing it correctly and the knowledge necessary to create secure policies, rather than end users. Having said that, we cannot expect site operators to create complex policies--their time and resources are limited. Thus SOMA works at a level of granularity that is both easy to understand and specify, that of DNS domains and URLs.

We assume that site administrators have the ability to create and control top-level URLs (static files or scripts) and that web browsers will follow the instructions specified at these locations precisely. In contrast, we do assume that the attacker controls arbitrary web servers and some of the content on legitimate servers (but not their policy files or their server software). Our goal is to prevent a web browser from communicating with a malicious web server when a legitimate web site is accessed, even if the content on that site or its partners has been compromised.

These assumptions mean that we do not address situations where an attacker compromises a web server to change policy files, compromises a web browser to circumvent policy checks, or performs intruder-in-the-middle attacks to intercept and modify communications. Further, we do not address the problem of users visiting malicious web sites directly, say as part of a phishing attack. While these are all important types of attacks, by focusing on the problem of unapproved communication we can create a simple, practical solution that addresses the security concerns we described in Section 2. Mechanisms to address these other threats largely complement rather than overlap with the protections of SOMA (see Section 6).

For example, the manifest file for maps.google.com would be found at http://maps.google.com/soma-manifest and might appear similar to Figure 2. If this file is present, the browser will enforce that only content from those locations can be embedded in a page coming from maps.google.com. Note that each location definition includes protocol, domain, and optionally port (the default one for the protocol is used if none is specified), so that origins are defined the same way as they are in the current same origin policy.

If the origin $A$ has a manifest that contains $B$, we denote this using $A \mathrel{-\!\!(\circ} B$. This symbol is chosen as a visual way to indicate that $A$ is the origin (the outer cup) and $B$ is a content provider web site for that origin (the inner circle). Similarly, if $A$'s manifest does not include $C$, we denote that as $A \mathrel{\not-\!\!(\circ} C$. If $A \mathrel{\not-\!\!(\circ} C$ then the browser will not allow anything in the pages from $A$ to contact the domain $C$; thus code, images, iframes, or any other content will not be loaded from $C$.

By convention, it is not necessary to include the origin domain itself in the manifest file as inclusions from the origin are assumed to be allowed.
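The manifest check described above can be sketched in Python as follows. The exact file layout is an assumption (a first line containing `SOMA Manifest`, followed by one location per line), as are the function names and the example domains; a response that does not look like a manifest is treated permissively, in line with the default behavior described in this paper.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def to_origin(location):
    """Normalize a URL or manifest entry to a (protocol, host, port) triple."""
    parts = urlsplit(location.strip())
    scheme = parts.scheme.lower()
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(scheme)
    return (scheme, parts.hostname, port)


def parse_manifest(text):
    """Return the set of listed origins, or None if the text is not a manifest."""
    lines = [line.strip() for line in text.splitlines()]
    if not lines or "SOMA Manifest" not in lines[0]:
        return None  # e.g. a generic "not found" page
    return {to_origin(line) for line in lines[1:] if line}


def manifest_allows(manifest_text, page_url, content_url):
    """Decide whether the page's manifest permits fetching content_url."""
    page, content = to_origin(page_url), to_origin(content_url)
    if content == page:
        return True  # the origin itself is implicitly allowed
    listed = parse_manifest(manifest_text)
    if listed is None:
        return True  # no usable manifest: permissive default
    return content in listed
```

Because entries are normalized to full origins, a manifest line for `http://images.example.net` would not permit content served from `https://images.example.net`.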

The approval files provide the other side of the mutual approval by allowing domains to indicate sites which are allowed to include content from them. SOMA approval files are similar in function to Adobe Flash's crossdomain.xml [4], but differ in that an approval file is not a single static file containing information about all approved domains. Instead, it is a script that provides a YES/NO response given a domain as input.

We use a script to prevent easy disclosure of the list of approved domains, since such a list could be used by an attacker (e.g. to determine which sites could be used in an XSRF attack or to determine business relationships). Attackers could still generate such a list by constantly querying soma-approval, but if they knew a list of domains to guess, they could just as easily visit those domains and see if they included any content from the target content provider. In addition, the smaller size of the approval responses containing simple YES/NO answers may provide a modest performance increase on the client side relative to the cost of loading a complete list of approved sites (especially for highly connected sites such as ad servers).

To indicate that A.com is allowed to load content from B.org, B.org needs to provide a script with the filename /soma-approval which returns YES when invoked through http://B.org/soma-approval?d=A.com. Negative responses can be indicated in a similar manner with the text of NO. If a negative response is received, then the browser refuses to load any content from B.org into a page from A.com. If no file with the name soma-approval exists, then we assume a default permissive behavior, described in greater detail in Section 3.6.

To reject all approval requests, soma-approval need only be a static file containing the string NO. Similarly, a static soma-approval with the word YES suffices to approve all requests.

An alternative proposal that avoids the need for a script involves allowing soma-approval to be a directory containing files for the allowed domains. Unfortunately, in order to handle our default permissive behavior, we would now require two requests: one to see if the soma-approval directory exists and another to see if the domain-specific file exists. Since most of the overhead of SOMA lies in the network requests (as shown in Section 5), we believe the better choice is to require a script.

A sample soma-approval script, written in PHP, is shown in Figure 3. This script uses an array to store policy information at the top of the file then outputs the policy as requested, defaulting to NO if no policy has been defined. In this example, A.com and C.net are the only approved domains.
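For readers without access to Figure 3, the same idea can be sketched in Python rather than PHP. This is not the script from the figure: the table name, the function name, and the case-insensitive matching are assumptions made for illustration. Only the behavior (a policy table with A.com and C.net approved, and NO as the default) follows the description above.

```python
from urllib.parse import parse_qs

# Hypothetical policy table: A.com and C.net are the only approved domains.
POLICY = {"a.com": "YES", "c.net": "YES"}


def soma_approval(query_string):
    """Return the response body for a request such as /soma-approval?d=A.com."""
    # parse_qs maps each parameter to a list of values; take the first "d".
    domain = parse_qs(query_string).get("d", [""])[0].strip().lower()
    # Any domain without an explicit policy entry is refused.
    return POLICY.get(domain, "NO")
```

For example, `soma_approval("d=A.com")` yields `YES`, while a query for an unlisted domain, or a request with no `d` parameter at all, yields `NO`.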

The symbols used for denoting approval are similar to those used for denoting inclusion in the manifest. If $B$ approves of content from its site being included into a page with origin $A$ we show this using $A \mathrel{(\circ\!\!-} B$. Again, since $B$ is the content provider it is connected to the small inner circle, and the origin $A$ is connected to the outer cup. If $C$ does not approve of another domain $A$, this is denoted using $A \mathrel{(\circ\!\!\not-} C$. If $A \mathrel{(\circ\!\!\not-} C$ then the browser will refuse to allow the page from $A$ to contact $C$ in any way. No scripts, images, iframes or other content from $C$ will be loaded for the web page at $A$.

It is important to note that $A \mathrel{-\!\!(\circ} B$ is not the same as, nor does it necessarily imply, $A \mathrel{(\circ\!\!-} B$. It is possible for one party to allow the inclusion and the other to refuse. Content is only loaded if both parties agree (i.e. $A \mathrel{-\!\!(\circ\!\!-} B$).

The additional constraints added by SOMA are illustrated in Figure 5. Rather than allowing all inclusions as requested by the web page, the modified browser checks first to see if both the page's web server and the external content's web server approve of each other. In Figure 5, web server $A$ is the source of the web page to be displayed. $A$ has a manifest that indicates that it approves of including content from both $B$ and $C$ ($A \mathrel{-\!\!(\circ} B$ and $A \mathrel{-\!\!(\circ} C$). When the browser is asked to include content from $B$ in the page from $A$, it makes a request to $B$ to determine if $A \mathrel{(\circ\!\!-} B$ ($B$ approves of $A$ incorporating its content). In the example, $B$ approves and its content is included on the page (since $A \mathrel{-\!\!(\circ\!\!-} B$). Also in the example, $C$'s content is not included because $A \mathrel{(\circ\!\!\not-} C$ ($C$ returns NO in response to a request for /soma-approval). $D$'s content is not included because $A \mathrel{\not-\!\!(\circ} D$ ($D$ is not listed in $A$'s manifest). $C$ returning NO prevents pages from $A$ accessing content from $C$ in any way (including embedding content or performing cross-site request attacks). $A \mathrel{\not-\!\!(\circ} D$ prevents web pages from $A$ interacting with $D$ in any way.

In the example, $A$'s web pages are trying to use content without $C$'s approval, or $A$'s web pages may be attempting a cross-site request forgery against $C$. In either case, the browser does not allow the communication.

In the case of content inclusions from $D$, the page is trying to include content but the manifest for $A$ does not include $D$. The content from $D$ is thus not loaded and not included (the web browser never checks to see if $D$ would have granted approval or not). In this fashion SOMA prevents information from being sent to or received from an untrusted server.

The process the browser goes through when fetching content is described in Figure 6. First, the web browser gets the page from server $A$. In parallel, the browser retrieves the manifest file from server $A$ using the same protocol (i.e. if the page is served over HTTPS, then the manifest will be retrieved over HTTPS as well). In this example, the web page requires content from web server $B$, so the browser first checks to see if $B$ is in $A$'s manifest. If $A \mathrel{\not-\!\!(\circ} B$, then the content is not loaded. If $A \mathrel{-\!\!(\circ} B$, then the browser verifies $B$'s reciprocal approval by checking the /soma-approval details on $B$ (again using the same protocol as the pending content request). If $A \mathrel{(\circ\!\!\not-} B$ then the browser again refuses to load the content. If $A \mathrel{(\circ\!\!-} B$ then the browser gets any necessary content from $B$ and inserts it into the web page.

Note that these checks are independent, i.e., the lack of a soma-manifest does not prevent the loading of a soma-approval file and vice-versa.
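The browser-side decision described above can be sketched as a single Python function. This is an illustrative simplification rather than the prototype's code: `fetch` is a hypothetical callable that returns a response body or `None` when nothing is found, origins are compared as plain strings, and missing or unrelated responses fall back to the permissive default.

```python
from urllib.parse import urlsplit


def manifest_permits(manifest_body, provider_origin):
    """Check the origin's manifest; a missing or unrelated file is permissive."""
    if manifest_body is None:
        return True
    lines = [ln.strip() for ln in manifest_body.splitlines() if ln.strip()]
    if not lines or "SOMA Manifest" not in lines[0]:
        return True
    return provider_origin in lines[1:]


def approval_permits(approval_body):
    """Only an explicit NO refuses; YES, a missing file, or other text permits."""
    return approval_body is None or approval_body.strip() != "NO"


def may_load(page_origin, provider_origin, fetch):
    """Return True if content from provider_origin may be loaded into the page."""
    if provider_origin == page_origin:
        return True  # inclusions from the origin itself are always allowed
    if not manifest_permits(fetch(page_origin + "/soma-manifest"), provider_origin):
        return False  # the provider's approval is never requested
    page_domain = urlsplit(page_origin).hostname
    approval_url = provider_origin + "/soma-approval?d=" + page_domain
    return approval_permits(fetch(approval_url))
```

The early return after the manifest check mirrors the point that a provider missing from the manifest is never contacted, so no information reaches it even in the form of an approval request.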

One key factor making SOMA a feasible defense is that the costs of implementation and operation are borne by those parties who stand the most to benefit and who are most suited to bear its costs. It also helps those who wish more control over what sites embed their content.

While SOMA provides no protection against local covert communications channels, it does protect against most timing attacks based upon cached content [11], simply because with SOMA the attacker's website will in general not be approved by the victim's for content inclusion.

Cross-site request forgery attacks occur when a malicious web site causes a URL to be loaded from another, victim web site. SOMA dictates that URLs can only be loaded if a site has been mutually approved, which means that sites are only vulnerable to cross-site request forgery from sites on their approval lists. Specifically, the approval files limit the possible attack vectors for a cross-site request forgery attack, while the manifest file ensures that an origin site cannot be used in an attack on another arbitrary site.

SOMA thus allows a new approach to protect web applications from cross-site request forgery. Any page which performs an action when loaded could be placed on a subdomain (by the server operator) which grants approval only to trusted domains, such as those they control. This change would limit attacks to cases where the user has been fooled into clicking on a link. It is unlikely that sites will need to grant external access to action-causing scripts: even voting sites, which generally want to make it easy for people to vote from an external domain with just a click, usually include some sort of click-through to prevent vote fraud.

SOMA also leaks less information to sites than the current Referer HTTP header (which is also sometimes used to prevent cross-site request forgery [22]). Because the Referer header contains the complete URL (and not just the domain), sensitive information can currently be leaked [19]. Many have already realized the privacy concerns related to the Referer URL and have implemented measures to block or change this header [35,30]. These proposals also prevent current cross-site request forgery detection attempts; however, they do not conflict with SOMA.

Even if attack code manages to load, its communication channels are limited. Many attacks require that information such as credit card numbers be sent to the attacker for later use, but this will no longer be possible with SOMA. Other attacks require the user to load dangerous content hosted externally, and these would also fail.

Thus, while some forms of cross-site scripting attacks remain viable, they are limited to attacks on the existing page that do not require communication through the browser to other non-approved domains. For example, it is not possible to steal cookies if there is no way to send the cookie information out to the attacker. It is possible that the site itself could provide the way (for example, cookies could be emailed out of a compromised webmail client or posted on a blog). Or, the attacker could instead choose to deface the page, since this attack requires the script only to modify the page. However, without the cross-site component, the remaining attacks are just single site code injection attacks, not cross-site attacks.

SOMA allows content providers more control over who uses their content. Thus SOMA offers a new way to prevent ``bandwidth theft'' where someone is including images or other content from a (non-consenting) content provider into their page using a direct link to the original file. Existing techniques usually require the web server to verify the HTTP referer header, which can be problematic (as discussed in Section 4.1.3). SOMA provides a technique to do the verification in the browser, not relying on HTTP referer.

Also known as hotlinking or inline linking, bandwidth theft is used maliciously by phishing sites, but may also be used unintentionally by people who do not know better. Regardless of the intent, this can still be damaging. While the content provider is paying hosting costs associated with serving up that file, it may be pulled in by, for example, a very popular blog or aggregate site that would generate a huge number of additional views. At the extreme, this could result in the content provider exceeding their bandwidth cap and being charged extra hosting fees or having their site shut down.

Many smaller sites would rather their content be used only by them for visitors to their site, and SOMA allows them to specify this and have browsers enforce this behavior. Bandwidth theft is often performed by people who are simply unaware that this is inappropriate behavior [6], and SOMA can address this since doing the wrong thing will simply not work.

If only the origin site has a soma-manifest, then SOMA still provides partial security coverage, enforcing the policy that is defined in the soma-manifest. If the origin site does not have a manifest file, but the content provider gives approval information through soma-approval then the policy defined by the content provider is enforced.

In order to verify that files returned in response to requests for soma-manifest and soma-approval are related to SOMA, we stipulate that the first line of the soma-manifest file must contain SOMA Manifest and the soma-approval file must contain only the word YES or NO. This is necessary since many websites return a generic page even when the requested resource has not been found, and such a page must not be confused with an intentional response to a SOMA request.

The full benefits of SOMA are available when origins and content providers both provide SOMA-related files, but the design is such that it is possible for either side to start providing files without needing extensive coordination to ensure that both are provided at the same time. In other words, incremental deployment is possible. In addition, even if one site refuses to provide policy files for whatever reason, others can still obtain lesser security guarantees. Moreover, the support of SOMA at servers need not be synchronized with deployment of SOMA at browsers.

A more security-oriented default policy would be possible, with SOMA assuming a NO response if the manifest or approval files are not found by the browser. This could potentially provide additional security even on sites which do not provide policy, as well as encouraging sites which do not have policies to set them. However, it would break almost all existing web pages, almost surely preventing the adoption of SOMA. The permissive default was chosen to reflect current browser behavior and to make it easier for SOMA to be deployed.

The manifest's list of approved domains can be determined by looking at pages on the site and compiling a list of content providers. This could be automated using a web crawler, or done by an admin who is willing to set policy. (It is possible that sites will wish to set more restrictive policy than the site's current behavior.) We examined the main page on popular sites to determine the approximate complexity of manifests required, and these results are detailed in Section 5.5.2.

Many sites will not wish to be external content providers and their needs will be easily served by a soma-approval file that just contains NO. Such a configuration will be common on smaller sites such as personal blogs. It will also be common on high-security sites such as banks, who want to be especially careful to avoid XSRF and having their images used by phishing sites. Phishing sites will have to copy over images, facilitating legal action over copyright violations.

Other sites may wish to be content providers to everyone. Sites such as Flickr and YouTube that wish to allow all users to include content will probably want to have a simple YES policy. This is most easily achieved by simply not hosting a soma-approval file (as this is the default), or by creating one that contains the word YES.

The sites requiring the most configuration are those who want to allow some content inclusions rather than all or none. For example, advertisers might want to provide code to sites displaying their ads. The list of domains that need to be approved is already maintained, as this is part of their client list. This database could then be queried to generate the approval list. Or a company with several web applications might want to keep them on separate domains but still allow interaction between them. Again, the necessary inclusions will be known in advance and necessary policy could be created by a system administrator or web developer.

For an evaluation of the performance impact of SOMA, see Section 5.5.3.

SOMA does not stop attacks to or from mutually approved partners. In order to avoid these attacks, it would be necessary to impose finer-grained control or additional separation between components. This sort of protection can be provided by the mashup solutions described in Section 6, albeit at the cost of extensive and often complex web site modifications.

SOMA cannot stop attacks on the origin where the entire attack code is injected, if no outside communication is needed for the attack. This could be web page defacement, same-site request forgery, or sandbox-breaking attacks intended for the user's machine. Some complex attacks might be stopped by size restrictions on uploaded content. More subtle attacks might need to be caught by heuristics used to detect cross-site scripting. Some of these solutions are described in Section 6.

SOMA cannot stop attacks from malicious servers not including content from remote domains. These would include phishing attacks where the legitimate server is not involved.

The SOMA add-on provides a component that does the necessary verification of the soma-manifest and soma-approval files before content is loaded.

Since it was not possible to insert policy files onto sites over which we had no control, we used a proxy server to simulate the presence of manifest and approval files on popular sites.

The primary overhead in running SOMA is due to the additional latency introduced by having to request a soma-manifest or soma-approval from each domain referenced on a web page. While these responses can be cached (like other web requests), the initial load time for a page is increased by the time required to complete these requests. Because the manifest can be loaded in parallel with the origin page (subsequent requests cannot be sent until the browser has received and parsed the origin page anyway), we do not believe manifest load times will affect total page load times. Because soma-approval files must be retrieved before contacting other servers, however, overhead in requesting them will increase page load times.

Because sites do not currently implement SOMA, we estimate SOMA's overhead using observed web request times. First, we determined the average HTTP request round-trip time for each of 40 representative web sites on a per-domain basis using PageStats [9]. We used this per-domain average as a proxy for the time to retrieve a soma-approval from a given domain. Then, to calculate page load times using SOMA, we increase the time to request all content from each accessed domain by the soma-approval request estimate for that domain. The time of the last response from any domain then serves as our final page load time.
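The estimation procedure can be sketched as follows. This is a minimal illustration under stated assumptions, not the measurement scripts used for the experiments: the function name, the data layout, and the example timings are hypothetical, and we assume that content from the origin domain itself incurs no soma-approval delay.

```python
def estimate_page_load(origin, last_response, approval_rtt):
    """Estimate page load time (seconds) with SOMA enabled.

    last_response: domain -> time at which the last response from that
                   domain arrived in the observed (non-SOMA) page load
    approval_rtt:  domain -> average HTTP round-trip time, used as a proxy
                   for the time to retrieve that domain's soma-approval
    """
    finish_times = []
    for domain, finished_at in last_response.items():
        # Assumption: same-origin content needs no soma-approval request.
        delay = 0.0 if domain == origin else approval_rtt[domain]
        finish_times.append(finished_at + delay)
    # The last response from any domain determines the page load time.
    return max(finish_times)


# Hypothetical observations for a single page load.
observed = {"news.example": 1.0, "ads.example": 2.0, "cdn.example": 2.1}
rtt = {"news.example": 0.2, "ads.example": 0.25, "cdn.example": 0.1}

with_soma = estimate_page_load("news.example", observed, rtt)
without_soma = max(observed.values())
```

In this made-up example the domain that finishes last changes once approval delays are added, which is why the estimate takes the maximum over domains rather than adding one fixed delay to the original load time.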

After running our test 30 times on 40 different web pages, we found that the average additional network latency overhead due to SOMA increased page load time from 2.9 to 3.3 seconds (or 13.28%) on non-cached page loads. On cached page loads, our overhead is negligible (since soma-approval is cached). We note that this increase in latency is due to network latency and not CPU usage. If we assume that 58% of page loads are revisits [32], the average network latency overhead of SOMA drops to 5.58%.
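The 5.58% figure follows from weighting the non-cached overhead by the fraction of page loads that are first visits. The short calculation below reproduces it, assuming (as the text states only approximately) that the overhead on cached loads is exactly zero; the variable names are illustrative.

```python
first_visit_overhead = 13.28   # percent, non-cached page loads
cached_overhead = 0.0          # percent, assumed zero ("negligible" in the text)
revisit_fraction = 0.58        # share of page loads that are revisits [32]

blended_overhead = ((1 - revisit_fraction) * first_visit_overhead
                    + revisit_fraction * cached_overhead)

print(f"{blended_overhead:.2f}%")  # prints 5.58%
```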

Because soma-approval responses are extremely small (see Section 5.5.3), they should be faster to retrieve than the average round-trip time estimate used in our experiments. Thus, these values should be seen as a worst-case scenario; in practice, we expect SOMA's overhead to be significantly less.

In order to verify that SOMA actively blocks information leakage, cross-site request forgery, cross-site scripting, and content stealing, we created examples of these attacks. We specifically tested the following attacks with the SOMA add-on:

All attacks were hosted at domain A and used domain B as the other domain involved. All attacks were successful without SOMA, and we found that with SOMA either a manifest at domain A not listing B or a soma-approval at domain B which returned NO for domain A prevented the attacks.
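The mutual approval check exercised by these tests can be sketched as below. This is a simplified model rather than the add-on's actual code: the function name, the dictionary-based representation of manifests and approvals, and the example domains are hypothetical, and we assume that a site without a manifest or without a soma-approval is treated as permitting the inclusion.

```python
def inclusion_allowed(origin, provider, manifests, approvals):
    """Return True if content from `provider` may be included by `origin`.

    manifests: origin domain -> set of provider domains listed in its manifest
    approvals: provider domain -> function(origin) returning True for YES
    """
    if origin == provider:
        return True  # same-origin content is always permitted

    # Check 1: the origin's manifest must list the provider.
    manifest = manifests.get(origin)
    if manifest is not None and provider not in manifest:
        return False

    # Check 2: the provider's soma-approval must answer YES for the origin.
    approve = approvals.get(provider)
    if approve is not None and not approve(origin):
        return False

    return True


# Hypothetical policies: either refusal on its own blocks the inclusion.
manifests = {"a.example": {"partner.example"}}   # does not list b.example
approvals = {"b.example": lambda origin: False}  # always answers NO

blocked_by_manifest = inclusion_allowed("a.example", "b.example", manifests, {})
blocked_by_approval = inclusion_allowed("a.example", "b.example", {}, approvals)
unrestricted = inclusion_allowed("a.example", "b.example", {}, {})
```

Here `unrestricted` models the situation without SOMA, in which neither party publishes a policy and the request goes through.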

To determine approximate sizes for manifests, we used the PageStats add-on [9] to load the home page for the global top 500 sites as reported by Alexa [3] and examined the resulting log, which contains information about each request that was made. On average, each site requested content from 5.45 domains other than the one being loaded, with a standard deviation of 5.3. The maximum number of content providers was 32 and the minimum was 0 (for sites that only load from their own domain).
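As a rough illustration of how such a request log can be turned into a manifest size estimate, the sketch below counts the distinct external hosts contacted by each page. The log format is hypothetical (it is not the PageStats format), and treating each distinct hostname as a separate domain is a simplifying assumption.

```python
from statistics import mean
from urllib.parse import urlsplit


def external_domains(page_url, request_urls):
    """Return the set of hosts contacted by a page, excluding its own host."""
    origin = urlsplit(page_url).hostname
    hosts = {urlsplit(url).hostname for url in request_urls}
    hosts.discard(origin)
    hosts.discard(None)  # ignore entries without a host, e.g. data: URLs
    return hosts


# Hypothetical log: page URL -> URLs requested while loading that page.
log = {
    "http://news.example/": [
        "http://news.example/style.css",
        "http://ads.example/banner.js",
        "http://cdn.example/logo.png",
        "http://cdn.example/photo.jpg",
    ],
    "http://blog.example/": ["http://blog.example/index.css"],
}

counts = [len(external_domains(page, urls)) for page, urls in log.items()]
average_manifest_size = mean(counts)
largest_manifest = max(counts)
smallest_manifest = min(counts)
```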

Of course, a site's home page may not be representative of its entire contents. So, as a further test we traversed large sections of a major news site and determined that the number of domains needed in the manifest was approximately 45; this value was close to the 33 needed for the site's home page.

Given the remarkable diversity of the Internet, there probably exist sites today that would require extremely large manifest files. This cursory survey, however, gives evidence that manifests for common sites would be relatively small.

Approvals result in tiny amounts of data being transferred: either a YES or NO response (around 4 bytes of data) plus any necessary headers.

Using data from the top 500 Alexa sites [3], we examined 3244 cases in which a content provider served data to an origin site. The average request size was 10459 bytes. Because many content providers are serving up large video, however, the standard deviation was fairly large: 118197 bytes. The median of 2528 bytes is much lower than the average. However, even this smaller median dwarfs the 4 bytes required for a soma-approval response. As such, we feel it safe to say that the additional network load on content providers due to SOMA is negligible compared to the data they are already providing to a given origin site.

Web-based execution environments have all been built with the understanding that unfettered remote code execution is extremely dangerous. SSL and TLS can protect communication privacy, integrity, and authenticity; code signing [27,31] can prevent the execution of unauthorized code; neither, however, protects against the execution of malicious code within the browser. Java [8] was the first web execution environment to employ an execution sandbox [34] and the same origin policy for restricting network communication. Subsequent systems for executing code within a browser, including JavaScript, have largely followed the model as originally embodied in Java applets.

While there has been considerable work on mitigating the failures of language-based sandboxing [17] and on sandboxing other, less trusted code such as browser plugins and helper applications [12], only recently have researchers begun addressing the limitations of sandboxing and same origin policy with respect to JavaScript applications.

Many researchers have attempted to detect and block malicious JavaScript. Some have proposed to instrument JavaScript automatically to detect known vulnerabilities [26], while others have proposed to filter JavaScript to prevent XSS [18] and XSRF [16] attacks. Another approach has been to perform dynamic taint tracking (combined with static analysis) to detect the information flows associated with XSS attacks [33]. Instead of attempting to detect dangerous JavaScript code behavior before it can compromise user data, SOMA prevents the unauthorized cross-domain information flows using site-specific policies.

Recently several researchers have focused on the problem of web mashups. Mashups are composite JavaScript-based web applications that draw functionality and content from multiple sources. To make mashups work within the confines of same origin policy, remote content must either be separated into separate iframes or all code must be loaded into the same execution context. The former solution is in general too restrictive while the latter is too permissive; mashup solutions are designed to bridge this gap. Two pioneering works in this space are Subspace [15] and MashupOS [14].

SOMA prevents the creation of mashups using unauthorized code, i.e., in order for a mashup to work with SOMA, every web site involved in it must explicitly allow participation. While such restrictions may inhibit the creation of novel, third party mashup applications, they also prevent attackers from creating malicious mashups (e.g., combinations of a legitimate bank's login page and a malicious login box). Given the state of security on the modern web, we believe such a trade-off is beneficial and, moreover, necessary.

While the general problem of unauthorized information flow is a classic problem in computer security [10], little attention has been paid in the research community to the problems of unauthorized cross-domain information flow in web applications beyond the strictures of same origin policy--this despite the fact that XSS and XSRF attacks rely heavily upon such unauthorized flows. Of course, the web was originally designed to make it easy to embed content from arbitrary sources. With SOMA, we are simply advocating that any such inclusions should be approved by both parties.

While SOMA is a novel proposal, we based the design of soma-approval and soma-manifest on existing systems. The soma-approval mechanism was inspired by the crossdomain.xml [4] mechanism of Flash. External content may be included in Flash applications only from servers with a crossdomain.xml file [4] that lists the Flash applications' originating server. Because the response logic behind a soma-approval request can be arbitrarily complex, we have chosen to specify that it be a server-side script rather than an XML file that must be parsed by a web browser.

The soma-manifest file was inspired by Tahoma [7], an experimental VM-based system for securing web applications. Tahoma allows users to download virtual machine images from arbitrary servers. To prevent these virtual machines from contacting unauthorized servers (e.g., when a virtual machine has been compromised), Tahoma requires every VM image to include a manifest specifying what remote sites that VM may communicate with.

Note that by themselves Flash's crossdomain.xml and Tahoma's server manifest do not provide the type of protection provided by SOMA. With Flash, a malicious content provider can always specify a crossdomain.xml file that would allow a compromised Flash program to send sensitive information to the attacker. With Tahoma, a malicious origin server can specify a manifest that would cause a user's browser to send data to an arbitrary web site, thus causing a denial-of-service attack or worse. By including both mechanisms, we address the limitations of each.

Most JavaScript-based attacks require that compromised web pages communicate with attacker-controlled web servers. Here we propose an extension to same origin policy--the same origin mutual approval (SOMA) policy--which restricts cross-domain communication to a web page's originating server and other servers that mutually approve of the cross-site communication. By preventing inappropriate or unauthorized cross-domain communication, attacks such as cross-site scripting and cross-site request forgery can be blocked.

The SOMA architecture's benefits versus other JavaScript defenses include: 1) it is incrementally deployable with incremental benefit; 2) it imposes no configuration or usage burden on end users; 3) the required changes in browser functionality and server configuration affect those who have the most reason to be concerned about security, namely the administrators of sensitive web servers and web browser developers; 4) these changes are easy to understand, simple to implement technically, and efficient in execution; and 5) it gives server operators a chance to specify what sites can interact with their content. While SOMA does not prevent attackers from injecting JavaScript code, with SOMA such code cannot leak information to attackers without going through an approved server.

We believe that SOMA represents a reasonable and practical compromise between benefits (increased security) and costs (adoption pain). Perhaps more significantly, our proposal of the SOMA architecture highlights that the ability to create web pages using arbitrary remote resources is a key enabling factor in web security exploits (including some techniques used in phishing). While other JavaScript defenses will no doubt arise, we believe that among the contributions of this paper are a focus on the underlying problem, namely, deficiencies in the JavaScript same origin policy, and the identification of several important characteristics of a viable solution.

It is easy to dismiss any proposal requiring changes to web infrastructure; however, there is precedent for wide-scale changes to improve security. Indeed, much as open email relays had to be restricted to mitigate spam, we believe that arbitrary content inclusions must be restricted to mitigate cross-site scripting and cross-site request forgery attacks. We hope this insight helps clarify the threats that must be considered when creating next-generation web technologies and other Internet-based distributed applications.